It all started in 1952, when strangely composed, anonymous love letters began appearing on the noticeboard of Manchester University’s Computing Department.

To everyone’s surprise, the love-struck author turned out to be the department’s recently acquired Ferranti Mark 1 computer (1951). Computing pioneer Christopher Strachey (1916–1975) had written a program that randomly generated amorous messages from a predefined selection of words. It was the start of a beautiful relationship between man and machine.

Strachey’s Love Letters were followed 10 years later by Spacewar! (1962), one of the first video games, and in 1963 Frieder Nake, Georg Nees and Michael Noll laid claim to being the first computer artists. The first truly digital decade, however, was the 1970s.

In 1971, a small company in California won the contract to create a microchip that would fit inside a Japanese pocket calculator. Instead of hardwiring the functionality into individual circuits, they created a programmable chip, allowing all the calculations to be performed for the first time by a single integrated circuit. The company was Intel and they had invented the microprocessor, the technology that would spark the digital revolution.

The following year (1972) Magnavox launched the Odyssey, the first home video game console, and Atari created Pong, the first commercially successful video arcade game. Digital technology was no longer just about efficiency; it had become entertainment. This did not escape the attention of author-turned-film-director Michael Crichton (1942–2008), who approached computer animation pioneer John Whitney (1917–1995) for help with his first Hollywood film, Westworld (1973). Whitney turned the job down but his son, John Whitney Jnr, took on the challenge. Working with Information International Inc. (III), he created the pixellated point of view of Yul Brynner’s (1920–1985) robot gunslinger. It was the first computer-generated image to appear in a film.

John Whitney Snr also had an indirect hand in the next CGI milestone. His long-term collaborator, Larry Cuba, created the Death Star briefing sequence in Star Wars (1977), the first extended use of CGI in a film. George Lucas knew a good idea when he saw one and with the money he earned from Star Wars he set up the company that became Pixar, taking CGI to infinity and beyond.

The same year Star Wars came out, Apple launched the Apple II, one of the first mass-produced home computers. However, the story of home computing started two years earlier, in 1975, when American company Micro Instrumentation and Telemetry Systems (MITS) decided to sell a home-assembly kit for a computer. Such was the excitement that their Altair 8800 was featured on the cover of Popular Electronics magazine before it was even finished, prompting Harvard student Bill Gates and his friend Paul Allen to write a BASIC interpreter for it, fast. The pair went on to found Microsoft, whose DOS would form the basis of the company’s empire for decades to come.

In 1975, a group of Altair owners in the San Francisco Bay Area organised the Homebrew Computer Club. One of the members was Steve Wozniak, then aged 24. He shared his designs for a home computer at the club and asked fellow members for advice. Steve Jobs gave him some: “Don’t give your ideas away for free.” The pair formed Apple in 1976, marketing Wozniak’s computer as the Apple I. Wozniak’s next breakthrough was to recreate Breakout, a variation of Pong, in software rather than hardwired circuitry. With the launch of the Apple II the following year, which incorporated sound and had a colour screen, here was a computer designed to be able to play Wozniak’s new game. Hot on the heels of the Apple II came the Commodore PET and the Tandy TRS-80. All three home computers went on to sell in their millions and became known as the 1977 Trinity.

As the Seventies drew to a close, computer scientists and hobbyists started to think of new ways to use these incredible new machines. In 1978 a team of researchers at the Massachusetts Institute of Technology strapped a camera to the top of a Jeep and drove it around the town of Aspen, taking photographs at 10-foot intervals. The Moviemap they created pre-dated Google’s mass-photography project, Street View, by almost 30 years. Also in 1978, Roy Trubshaw, a student at Essex University, created an online role-playing game called Multi-User Dungeon, MUD for short. Trubshaw had pioneered online multi-player gaming.

The digital revolution was now unstoppable and its unremitting flow into every part of our lives was under way. The first digital instrument appeared in Australia in 1979. Programmable synthesisers had been available before this, but Peter Vogel and Kim Ryrie’s Fairlight CMI was the first to store sounds as digital files. Early customers included Peter Gabriel, Herbie Hancock and Stevie Wonder. Roger Linn’s digital drum machine, the Linn LM-1, soon followed. Bands like the Yellow Magic Orchestra, Landscape and the Human League were amongst the first to embrace this use of digital technology, but no genre made better use of these new tools than hip hop. Recording artists like Afrika Bambaataa, Grandmaster Flash and Run-DMC sampled, remixed and exported African-American culture to the world.

As hip hop went mainstream, so did the PC. British engineer Clive Sinclair gave the world a home computer for under £100, Andy Warhol painted Debbie Harry using an Amiga 1000 (1985) and Apple launched the Macintosh (1984), promising that 1984 would not be like ‘1984’.

Parents around the world bought their children computers so they could learn how to program. Most just played games. Millions of hours were spent playing computer games like Manic Miner (1983), Elite (1984) and The Legend of Zelda (1986), but this was not wasted time. Those children grew up to form computer game companies like id Software, who took us from Wolfenstein 3D (1992) to Quake (1996) in four short years.

By the mid-’90s, thanks to the user-friendly nature of the Mosaic browser (1993), the World Wide Web had opened up beyond the world of computer geeks and academics. While US dot-com companies seized on the commercial opportunities offered by the web, Eastern Europe was crucial to its artistic development. Artists like Olia Lialina and Alexei Shulgin were hugely influential, demonstrating the power and authenticity of non-linear narratives. Lialina’s My Boyfriend Came Back from the War (1996) is uncomfortably real, while Shulgin’s Form Art (1997) graphically reveals the evolving, life-like nature of technology.

The web gave artists operating outside the traditional art world access to a global audience. Free from the commercial world of galleries and art institutions, Net Art was a reaction against the cultural elite, and its lack of marketability made it all the more authentic. Artists and designers like Aram Bartholl, Daniel Brown and Rafaël Rozendaal continue the spirit of Net Art, but things have changed. Net Art is no longer about internet culture; it’s about the impact of the internet on culture.

Digital Archaeology is not just a nostalgic trip into our digital past. Like all archaeological projects it seeks to plug gaps in the historical record, documenting, restoring and preserving lost, neglected and vulnerable work.

Tragically, this covers most work that is born digital. The fact that digital content is so easy to duplicate means that copies are not valued. Worse, the original version is often also considered disposable. Given this, and the rapid obsolescence of digital formats, many experts predict that the second half of the 20th century will become a digital dark age. Until we discover the digital equivalent of acid-free paper, bits and bytes are extremely fragile.

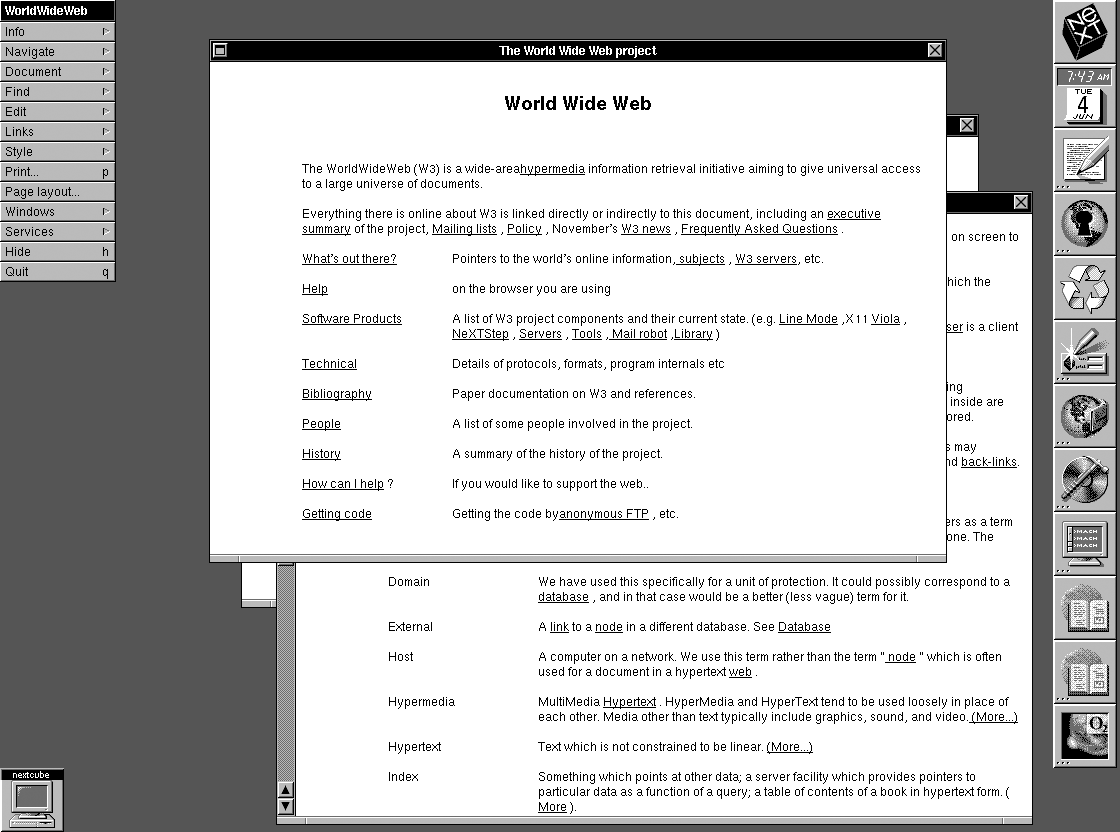

The story of the first web page is typical. First published in August 1991, the page was continually overwritten until March 1992. Whilst 48 copies of the Gutenberg Bible, printed over 500 years ago, are known to exist, no evidence of the first web page survives, not even a screenshot.

This is not an isolated case. Take the work of art collective Antirom, formed in London in 1994 as a protest against multi-mediocrity. Led by interactive pioneer Andy Cameron, they had the radical vision to explore interaction as a form of media in its own right, rather than simply an interface to existing content. Their work largely exists on an obsolete format, the CD-ROM.

Looking back further, the challenges become more apparent. Even where the code of early video games and computer art has been preserved, the works can no longer be seen in their original environment. Archaic mainframe computers and early consoles are inoperable. This is where we can make a distinction between archaeology and archiving: archaeology examines the viewing problem. A book displays itself, whereas computer art, video games and websites are invisible without the appropriate software and hardware.

Digital Archaeology explores these forgotten roots and offers alternative histories. The stories of the engineers and entrepreneurs who invented and exploited digital technology have been told, but the stories of the artists and designers who shaped our digital world are often unknown.

The last 60 years have seen the birth and rise of digital technology at an incredible pace. We have witnessed a revolution equal in magnitude to the transition from the Middle Ages to the modern world. We have a responsibility to expose this artistic digital history, the building blocks of modern culture, to future generations – an audience who will be unable to imagine a non-digital world.

This is an extract from the Digital Revolution catalogue, available on Amazon.